Image by Getty Images/Futurism

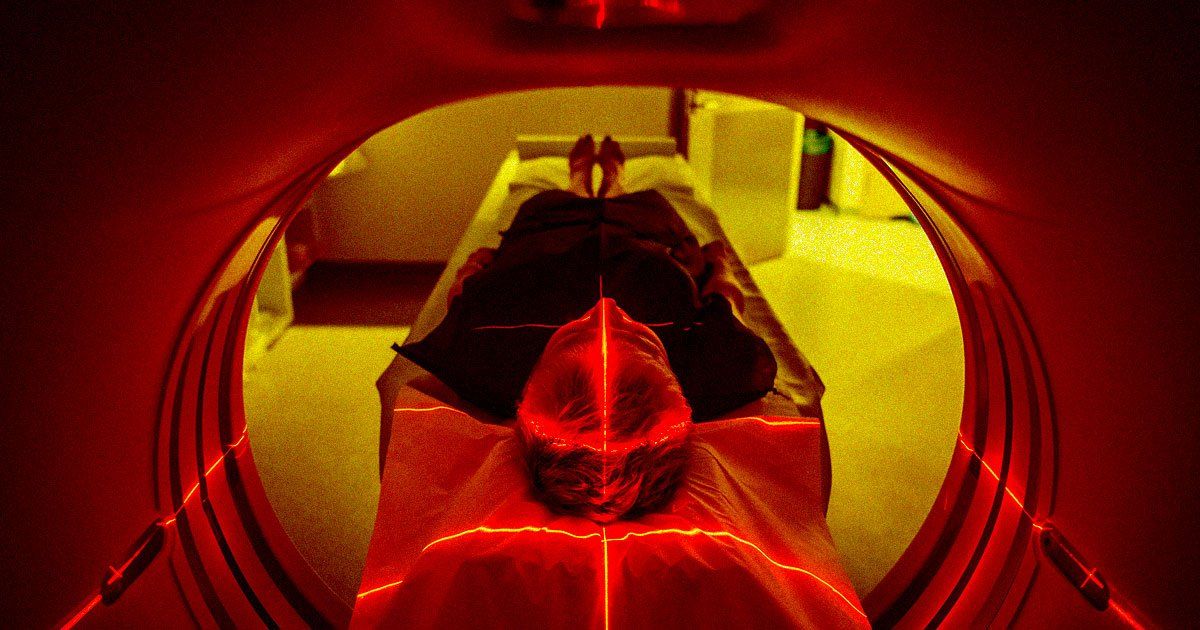

Researchers at the University of Texas claim to have built a “decoder” algorithm that can reconstruct what somebody is thinking just by monitoring their brain activity using an ordinary fMRI scanner, The Scientist reports.

The yet-to-be-peer-reviewed research could lay the groundwork for much more capable brain-computer interfaces designed to better help those who can’t speak or type.

In an experiment, the researchers used fMRI machines to measure changes in blood flow, rather than the firing of individual neurons, which is infamously “noisy” and difficult to interpret. From those measurements, they set out to decode the broader sentiment or semantics of what three study subjects were thinking while listening to 16 hours of podcasts and radio stories.

They used this data to train an algorithm that they say can associate these blood flow changes with what the subjects were currently listening to.
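To make the idea concrete, here is a minimal sketch of one common way to set up this kind of decoding. It is not the researchers' actual method, which the report does not detail. It assumes that each stretch of audio can be summarized as a numeric feature vector, fits a ridge regression that predicts blood flow responses from those features, and then picks whichever candidate stimulus best explains a new scan. All data here is simulated, and the names `fit_ridge` and `decode` are hypothetical.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W minimizing ||XW - Y||^2 + alpha * ||W||^2."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def decode(response, W, candidates):
    """Return the index of the candidate whose predicted response
    correlates best with the observed response."""
    predicted = candidates @ W  # shape: (n_candidates, n_voxels)
    scores = [np.corrcoef(p, response)[0, 1] for p in predicted]
    return int(np.argmax(scores))

# Simulated stand-ins (assumptions, not real fMRI data).
rng = np.random.default_rng(0)
n_timepoints, n_features, n_voxels = 200, 8, 50
true_W = rng.normal(size=(n_features, n_voxels))        # hidden "brain" mapping
stim = rng.normal(size=(n_timepoints, n_features))      # features of what was heard
bold = stim @ true_W + 0.5 * rng.normal(size=(n_timepoints, n_voxels))  # noisy responses

# Training: learn how stimulus features map onto blood flow changes.
W = fit_ridge(stim, bold, alpha=1.0)

# Decoding: given a new scan, guess which of several candidate stimuli produced it.
candidates = rng.normal(size=(5, n_features))
target = 3
observed = candidates[target] @ true_W + 0.5 * rng.normal(size=n_voxels)
best = decode(observed, W, candidates)
print("decoded candidate:", best, "actual:", target)
```

In this toy setup the model is fit on a single simulated subject's data, so the learned weights describe only that subject's mapping.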

The results were promising, with the decoder being able to deduce meaning “pretty well,” as University of Texas neuroscientist and coauthor Alexander Huth told The Scientist.

However, the system had some shortcomings. For instance, the decoder often mixed up who said what in the radio and podcast recordings. In other words, the algorithm “knows what’s happening pretty accurately, but not who is doing the things,” Huth explained.

Intriguingly, the algorithm was also unable to take what it had learned about semantics from one participant’s brain scans and apply it to another’s scans.

Despite these shortcomings, the decoder was even able to deduce a story when participants watched a silent film, meaning that it’s not limited to spoken language, either. That suggests these findings could also help us understand the functions of different regions of the brain and how they overlap in making sense of the world.

Other neuroscientists, who were not directly involved, were impressed. Sam Nastase, a researcher and lecturer at the Princeton Neuroscience Institute, called the research “mind blowing,” telling The Scientist that “if you have a smart enough modeling framework, you can actually pull out a surprising amount of information” from these kinds of recordings.

Yukiyasu Kamitani, a computational neuroscientist at Kyoto University, agreed, telling The Scientist that the study “sets a solid ground for [brain-computer interface] applications.”

READ MORE: Researchers Report Decoding Thoughts from fMRI Data [The Scientist]
